Video decoding pipeline

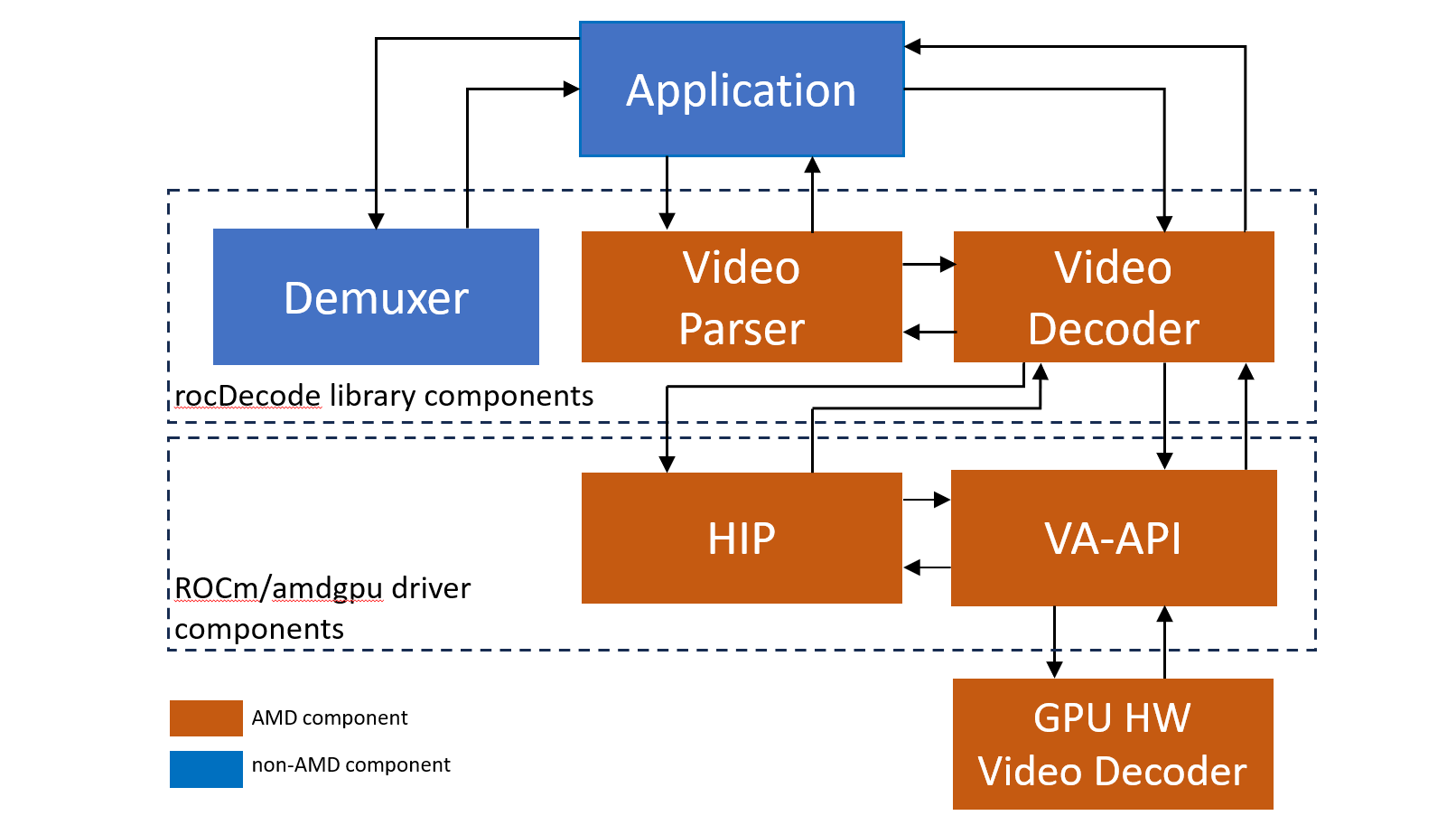

There are three main components in rocDecode:

- Demuxer: Our demuxer is based on FFmpeg, a leading multimedia framework. For more information, refer to the FFmpeg website.
- Video parser APIs
- Video decoder APIs

rocDecode follows this workflow:

1. The demuxer extracts a segment of video data and sends it to the video parser.
2. The video parser extracts crucial information, such as picture and slice parameters, and sends it to the decoder APIs.
3. The hardware receives the picture and slice parameters, then decodes a frame using the Video Acceleration API (VA-API).

This process repeats in a loop until all frames have been decoded.
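The control flow of this loop can be sketched with a toy model. This is a minimal illustration, not the rocDecode or FFmpeg API: `Packet`, `PictureParams`, `Frame`, `ToyDemuxer`, `ParsePacket`, `ToyDecoder`, and `DecodeAll` are all hypothetical names invented for this example, and the "decoding" simply counts frames.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Hypothetical stand-ins for illustration only; NOT rocDecode or FFmpeg types.
struct Packet { std::vector<std::uint8_t> bytes; };   // one segment of compressed video data
struct PictureParams { std::size_t payload_size; };   // stands in for picture and slice parameters
struct Frame { int index; };                          // stands in for one decoded frame

// Toy demuxer: hands out pre-split packets until the stream is exhausted.
class ToyDemuxer {
public:
    explicit ToyDemuxer(std::vector<Packet> packets) : packets_(std::move(packets)) {}

    // Returns false at end of stream; otherwise copies the next packet into `out`.
    bool NextPacket(Packet& out) {
        if (next_ >= packets_.size()) return false;
        out = packets_[next_++];
        return true;
    }

private:
    std::vector<Packet> packets_;
    std::size_t next_ = 0;
};

// Toy parser: derives the parameters the decoder needs from one packet.
inline PictureParams ParsePacket(const Packet& packet) {
    return PictureParams{packet.bytes.size()};
}

// Toy decoder: turns one set of parameters into one frame.
class ToyDecoder {
public:
    Frame Decode(const PictureParams& params) {
        assert(params.payload_size > 0 && "this sketch does not expect empty packets");
        return Frame{decoded_++};
    }

private:
    int decoded_ = 0;
};

// The loop itself: demux, parse, decode, and repeat until the demuxer runs dry.
inline std::vector<Frame> DecodeAll(ToyDemuxer& demuxer, ToyDecoder& decoder) {
    std::vector<Frame> frames;
    Packet packet;
    while (demuxer.NextPacket(packet)) {
        const PictureParams params = ParsePacket(packet);
        frames.push_back(decoder.Decode(params));
    }
    return frames;
}
```

In this simplified model every packet yields exactly one frame. A real stream need not behave that way, so treat the one-to-one mapping as an assumption of the sketch.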

Steps in decoding video content for applications (available in the rocDecode Toolkit):

1. Demultiplex the content into elementary stream packets (FFmpeg).
2. Parse the demultiplexed packets into video frames for the decoder provided by the rocDecode API.
3. Decode compressed video frames into YUV frames using the rocDecode API.
4. Wait for the decoding to finish.
5. Get the decoded YUV frame from the amd-gpu context into HIP (using VAAPI-HIP interoperability under ROCm).
6. Run HIP kernels on the mapped YUV frame, for example format conversion, scaling, object detection, or classification.
7. Release the decoded frame.
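The ordering of the last five steps above (decode, wait, map, process, release) matters because a decoded surface is a limited resource that has to be handed back before it can be reused. The following is a minimal sketch of that lifecycle as a small state machine. It is not the rocDecode API: `SurfaceState`, `ToySurfacePool`, and `DecodeAndProcessOne` are hypothetical names, and no GPU work takes place.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical model of a pool of decode surfaces; NOT the rocDecode API.
enum class SurfaceState { Free, Decoding, Ready, Mapped };

class ToySurfacePool {
public:
    explicit ToySurfacePool(std::size_t count) : states_(count, SurfaceState::Free) {
        assert(count > 0 && "the pool needs at least one surface");
    }

    // Decode step: claim a free surface. Returns its index, or -1 if none is free.
    int SubmitDecode() {
        for (std::size_t i = 0; i < states_.size(); ++i) {
            if (states_[i] == SurfaceState::Free) {
                states_[i] = SurfaceState::Decoding;
                return static_cast<int>(i);
            }
        }
        return -1;
    }

    // Wait step: the toy "hardware" finishes as soon as we ask.
    void WaitForDecode(int idx) { Transition(idx, SurfaceState::Decoding, SurfaceState::Ready); }

    // Map step: make the finished frame visible to the compute side.
    void Map(int idx) { Transition(idx, SurfaceState::Ready, SurfaceState::Mapped); }

    // Release step: hand the surface back so a later decode can reuse it.
    void Release(int idx) { Transition(idx, SurfaceState::Mapped, SurfaceState::Free); }

private:
    // Rejects out-of-order calls, such as mapping a frame that is still decoding.
    void Transition(int idx, SurfaceState from, SurfaceState to) {
        if (idx < 0 || static_cast<std::size_t>(idx) >= states_.size() ||
            states_[static_cast<std::size_t>(idx)] != from) {
            throw std::logic_error("surface is not in the expected state");
        }
        states_[static_cast<std::size_t>(idx)] = to;
    }

    std::vector<SurfaceState> states_;
};

// Runs the five steps in order for one frame. `kernel` stands in for the
// processing step and receives the index of the mapped surface.
template <typename Kernel>
bool DecodeAndProcessOne(ToySurfacePool& pool, Kernel kernel) {
    const int idx = pool.SubmitDecode();
    if (idx < 0) return false;   // no free surface: an earlier frame was never released
    pool.WaitForDecode(idx);
    pool.Map(idx);
    kernel(idx);
    pool.Release(idx);
    return true;
}
```

With a pool of one surface, calling `DecodeAndProcessOne` twice succeeds only because the first call releases its surface. Skipping the release would make the second `SubmitDecode` return -1.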

Note

YUV is a color space that represents images using luminance (Y) for brightness and two chrominance components (U and V) for color information.
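As an illustration of how the three components combine into a displayable color, here is a sketch of a per-pixel YUV to RGB conversion. It assumes 8-bit full-range samples and the commonly used BT.601 coefficients. Both are assumptions of this example: the correct matrix and range for a given stream depend on its metadata. `Rgb`, `ClampToByte`, and `YuvToRgb` are hypothetical names, and a real application would typically run this kind of conversion in a HIP kernel rather than on the CPU.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

struct Rgb { std::uint8_t r, g, b; };

// Rounds to the nearest integer and clamps into the 8-bit range [0, 255].
inline std::uint8_t ClampToByte(double value) {
    return static_cast<std::uint8_t>(std::min(255.0, std::max(0.0, std::round(value))));
}

// Converts one pixel. ASSUMPTION: full-range 8-bit samples with BT.601
// coefficients; U and V are stored with an offset of 128.
inline Rgb YuvToRgb(int y, int u, int v) {
    assert(y >= 0 && y <= 255 && u >= 0 && u <= 255 && v >= 0 && v <= 255);
    const double cb = u - 128.0;   // blue-difference chroma, centered on zero
    const double cr = v - 128.0;   // red-difference chroma, centered on zero
    return Rgb{
        ClampToByte(y + 1.402 * cr),
        ClampToByte(y - 0.344136 * cb - 0.714136 * cr),
        ClampToByte(y + 1.772 * cb),
    };
}
```

When U and V both sit at their midpoint of 128, the chroma terms vanish and the pixel is a shade of gray whose brightness is set by Y alone.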

The sample applications in our GitHub repository demonstrate the preceding steps.