Test the RAG pipelines with Open-WebUI

2025-09-26

After you’ve executed the ROCm-RAG pipelines, you can test them with Open-WebUI.

When your retrieval pipeline is up and running (check the logs to make sure all components are ready), you can access the Open-WebUI frontend by navigating to https://<Your deploy machine IP> or http://<Your deploy machine IP>:8080.

When you set up a new Open-WebUI account, your user data is saved to /rag-workspace/rocm-rag/external/open-webui/backend/data.

Set up and test pipelines with Open-WebUI

To set up and test with Open-WebUI:

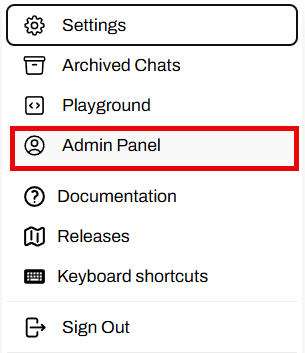

Go to the admin panel:

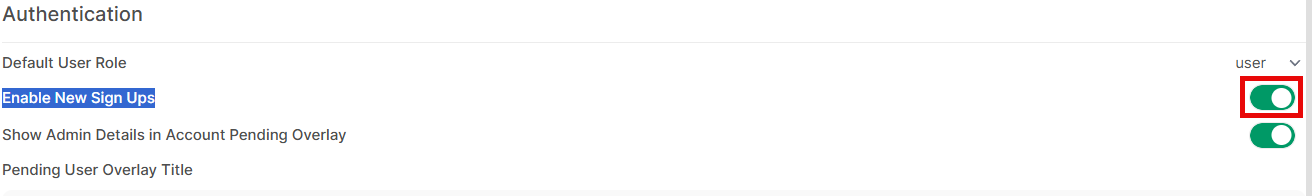

Select Enable User Sign Ups to allow new user registration. User data is saved to /rag-workspace/rocm-rag/external/open-webui/backend/data/webui.db:

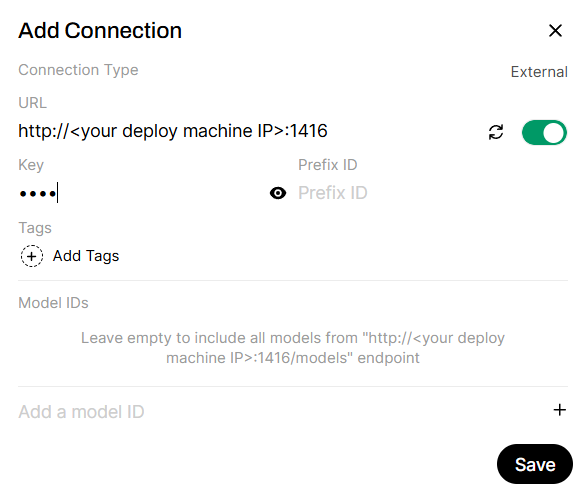

Add the RAG server APIs (by default, haystack runs on port 1416 and langgraph runs on port 20000):

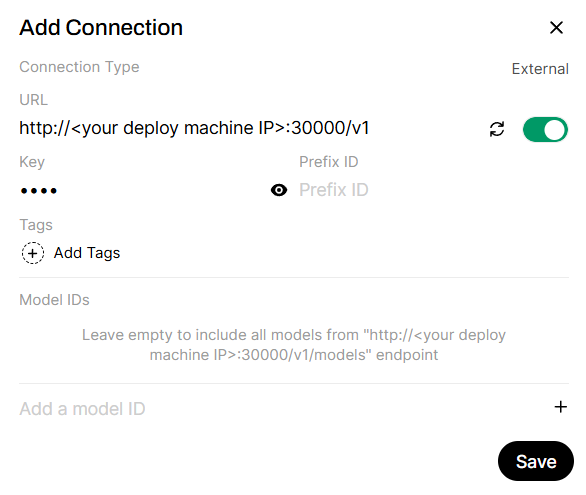

Add the example LLM server API (if ROCM_RAG_USE_EXAMPLE_LLM=True; otherwise, replace this API URL with your inference server URL):

Here are the API URLs used in this example:

- http://<Your deploy machine IP>:1416 -> haystack server, provides ROCm-RAG-Haystack if Haystack is chosen as the RAG framework
- http://<Your deploy machine IP>:20000/v1 -> langgraph server, provides ROCm-RAG-Langgraph if Langgraph is chosen as the RAG framework
- http://<Your deploy machine IP>:30000/v1 -> Qwen/Qwen3-30B-A3B-Instruct-2507 inference server if ROCM_RAG_USE_EXAMPLE_LLM=True

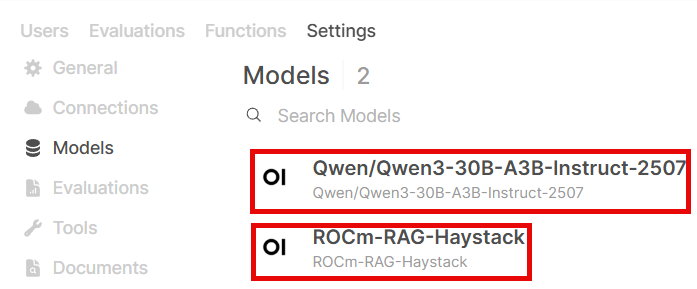
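As a rough aid for filling in the connection settings, the following Python sketch assembles the API URLs for one deployment. The ports and paths are the defaults quoted in this section; the function name `connection_urls`, its parameters, and the `"rag"`/`"llm"` keys are hypothetical and exist only for illustration.

```python
from typing import Dict, Optional

# Default ports and paths as quoted in this section.
RAG_ENDPOINTS = {
    "haystack": "http://{host}:1416",
    "langgraph": "http://{host}:20000/v1",
}
EXAMPLE_LLM_ENDPOINT = "http://{host}:30000/v1"


def connection_urls(
    host: str,
    framework: str,
    use_example_llm: bool = True,
    llm_url: Optional[str] = None,
) -> Dict[str, str]:
    """Return the API URLs to enter in Open-WebUI (hypothetical helper)."""
    key = framework.lower()
    if key not in RAG_ENDPOINTS:
        raise ValueError(f"unknown RAG framework: {framework!r}")

    urls = {"rag": RAG_ENDPOINTS[key].format(host=host)}
    if use_example_llm:
        # Mirrors ROCM_RAG_USE_EXAMPLE_LLM=True: use the bundled example server.
        urls["llm"] = EXAMPLE_LLM_ENDPOINT.format(host=host)
    elif llm_url:
        # Otherwise the caller supplies their own inference server URL.
        urls["llm"] = llm_url
    else:
        raise ValueError("llm_url is required when the example LLM is disabled")
    return urls
```

Calling `connection_urls("<Your deploy machine IP>", "haystack")` yields the haystack URL plus the example LLM URL; pass `use_example_llm=False` together with `llm_url` to point at a different inference server.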

Here’s a list of models provided by these APIs. The list is retrieved by sending an HTTP GET request to API_URL:PORT/v1/models:

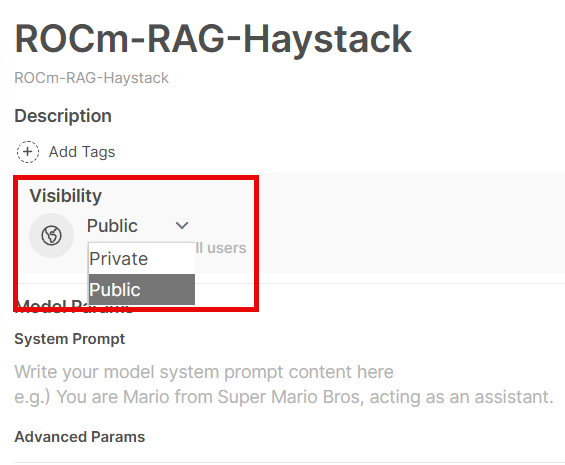
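The model list can also be fetched outside the UI. The sketch below sends the GET request to `/v1/models` using only the Python standard library. It assumes the servers return an OpenAI-style body of the form `{"data": [{"id": ...}]}`, which this page does not confirm; the helper names are hypothetical.

```python
import json
from typing import List
from urllib.request import urlopen


def models_url(api_url: str) -> str:
    """Build the model-listing URL from a server URL, with or without /v1."""
    root = api_url.rstrip("/")
    if root.endswith("/v1"):
        root = root[: -len("/v1")]
    return root + "/v1/models"


def parse_model_ids(payload: dict) -> List[str]:
    """Extract model ids, assuming an OpenAI-style {"data": [{"id": ...}]} body."""
    return [entry["id"] for entry in payload.get("data", [])]


def list_models(api_url: str, timeout: float = 10.0) -> List[str]:
    """Send GET <api_url>/v1/models and return the advertised model ids."""
    with urlopen(models_url(api_url), timeout=timeout) as response:
        return parse_model_ids(json.load(response))
```

For example, `list_models("http://<Your deploy machine IP>:20000/v1")` queries the langgraph server on its default port.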

These are the model settings. By default, models are accessible only to the admin. Make sure you share each model publicly (with all registered users) or with private groups:

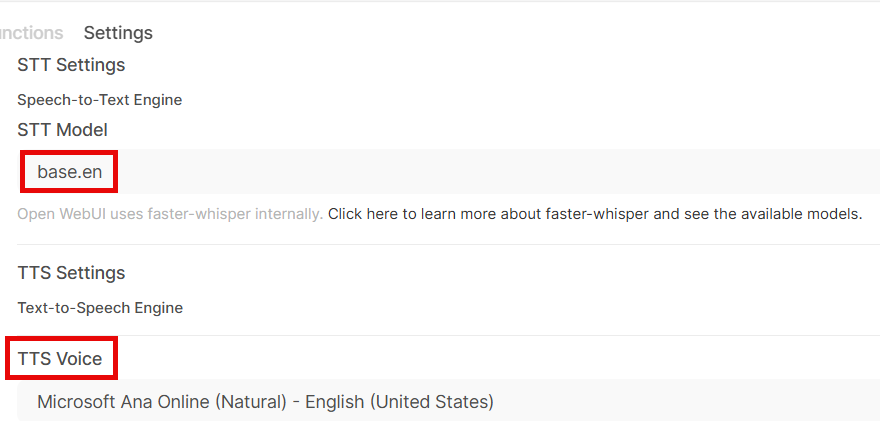

Select the fast-whisper model (see the list of models provided by fast-whisper) and the TTS voice to use:

Comparing the RAG pipeline against direct LLM responses

You can test the accuracy of the ROCm-RAG pipelines by comparing their answers with direct LLM responses.

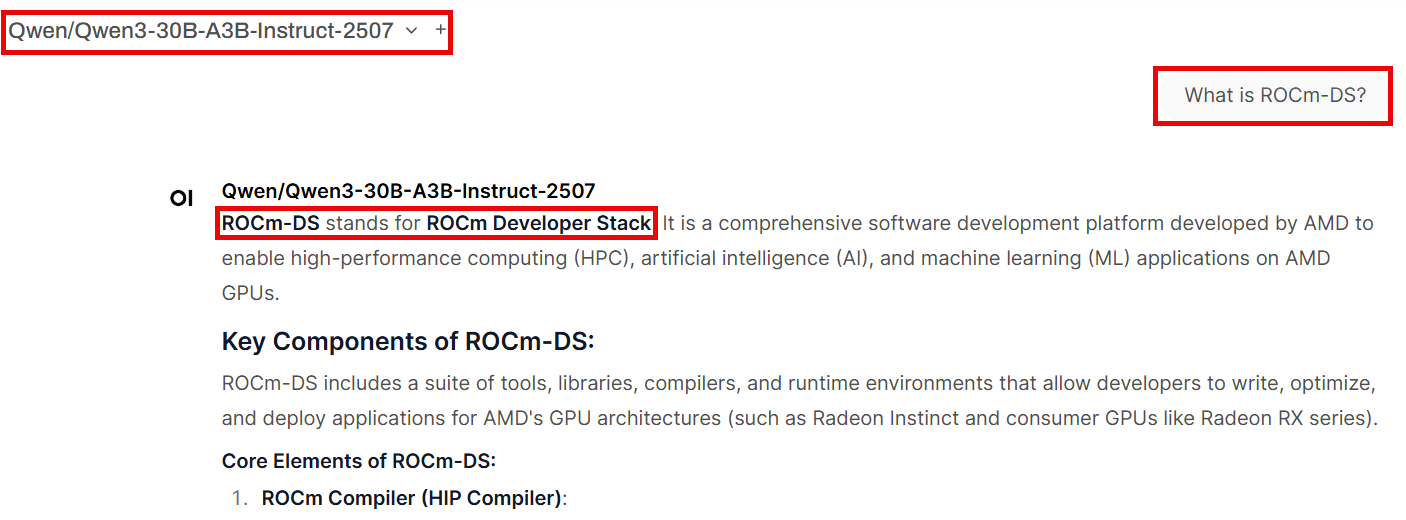

As an example, select Qwen/Qwen3-30B-A3B-Instruct-2507 from the model drop-down and ask: What is ROCm-DS?

Since ROCm-DS is a newer GPU-accelerated data science toolkit by AMD, the direct LLM output may contain hallucinations:

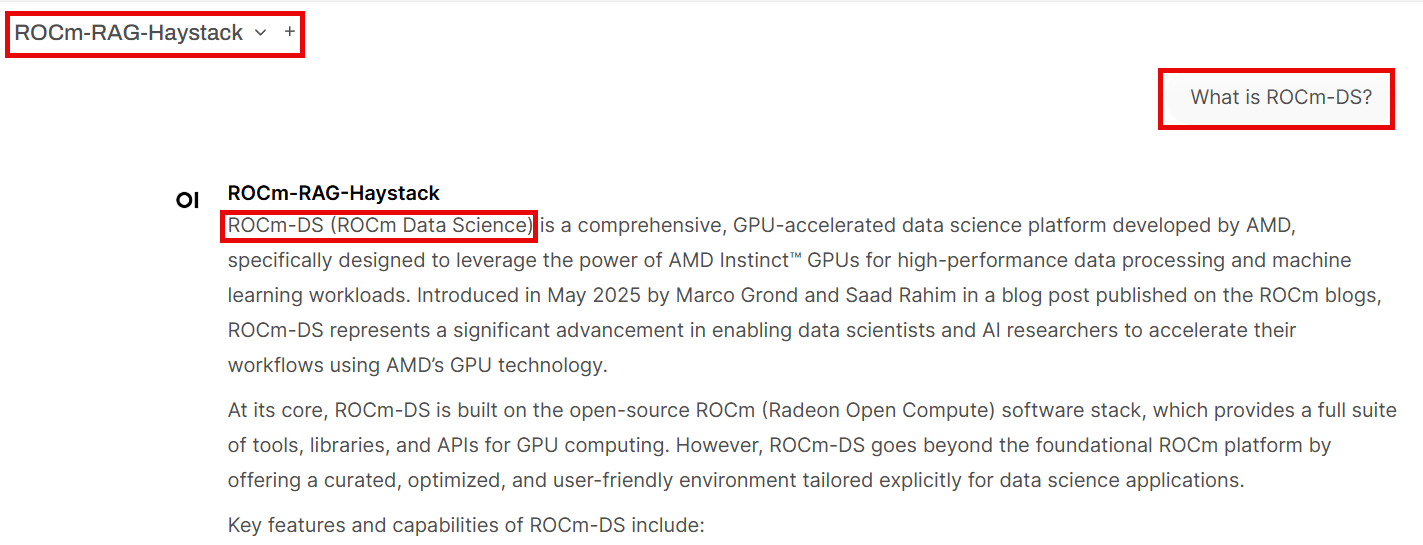

Then ask the same question, What is ROCm-DS?, using a ROCm-RAG-* model. The RAG pipeline returns the correct definition of ROCm-DS, leveraging the knowledge base built in the previous steps:

This screenshot of the ROCm-DS blog page confirms that the correct definition of ROCm-DS refers to AMD’s GPU-accelerated data science toolkit:
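The comparison described in this section can also be scripted instead of run through the chat UI. The sketch below sends the same question to the example LLM server and to the langgraph RAG server, using the default ports listed earlier. It assumes both servers accept OpenAI-style `POST /v1/chat/completions` requests and return `choices[0].message.content`, which this page does not state explicitly; all function names are hypothetical.

```python
import json
from typing import Dict
from urllib.request import Request, urlopen

QUESTION = "What is ROCm-DS?"


def build_chat_request(base_url: str, model: str, question: str) -> Request:
    """Build an OpenAI-style chat completion request (assumed API shape)."""
    body = {"model": model, "messages": [{"role": "user", "content": question}]}
    return Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_answer(payload: dict) -> str:
    """Pull the assistant text out of an OpenAI-style response body."""
    return payload["choices"][0]["message"]["content"]


def ask(base_url: str, model: str, question: str, timeout: float = 120.0) -> str:
    """Send one question to one server and return the answer text."""
    request = build_chat_request(base_url, model, question)
    with urlopen(request, timeout=timeout) as response:
        return extract_answer(json.load(response))


def compare(host: str) -> Dict[str, str]:
    """Ask the same question with and without retrieval."""
    return {
        "direct": ask(f"http://{host}:30000/v1", "Qwen/Qwen3-30B-A3B-Instruct-2507", QUESTION),
        "rag": ask(f"http://{host}:20000/v1", "ROCm-RAG-Langgraph", QUESTION),
    }
```

Reading the two answers returned by `compare("<Your deploy machine IP>")` side by side against the ROCm-DS blog page gives the same check as the manual steps above.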