Introduction to the RDC API

Note

This is the alpha version of the RDC API, and it is subject to change without notice. The primary purpose of this API is to solicit feedback. AMD accepts no responsibility for any software breakage caused by API changes.

RDC API

The RDC API is the core library that provides all RDC features.

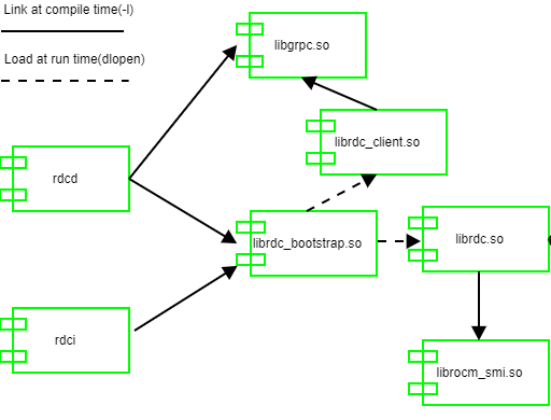

The RDC API includes the following libraries:

- `librdc_bootstrap.so`: Loads one of the following two libraries at runtime, depending on the mode:
  - `rdci` mode: Loads `librdc_client.so`.
  - `rdcd` mode: Loads `librdc.so`.
- `librdc_client.so`: Exposes RDC functionality using a gRPC client.
- `librdc.so`: The RDC API itself. It depends on `libamd_smi.so`.
- `libamd_smi.so`: Stateless, low-overhead access to GPU data.

Fig. 5 Different libraries and how they are linked.